219 Design often uses internal projects to explore emerging technology or to hone our skills. Last year, we decided to explore computer vision, a field of artificial intelligence that trains computers to interpret and understand the visual world. This experiment ultimately became our Tool Recognizer, an app to help us better organize the office. Here are a few key takeaways from my experience.

Tool Recognizer Application Overview

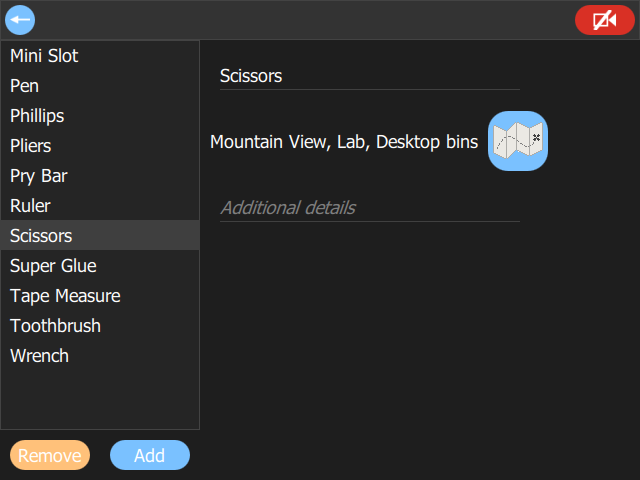

Our offices house multidisciplinary teams that use a wide variety of discipline-specific tools. These tools are often left around the office, especially when things get busy. We needed a way for people without discipline-specific experience to identify tools and put them where they belong. That is the concept behind the Tool Recognizer. When you find something you don’t recognize, you simply scan it, and the application tells you what it is and shows you exactly where it belongs.

The Core Technology

- OpenCV for image capturing

- TensorFlow for machine learning

- Qt and QML for the user interface

Creating the Tool Recognizer with Computer Vision

Challenge: Find a useful, limited-scope internal project based on computer vision.

Solution: We ultimately came up with the idea for the Tool Recognizer, but it didn’t begin that way. Among other ideas, we thought about creating a “Pizza Recognizer.” Putting away tools that had been left out was obviously a more pressing issue.

The Concept:

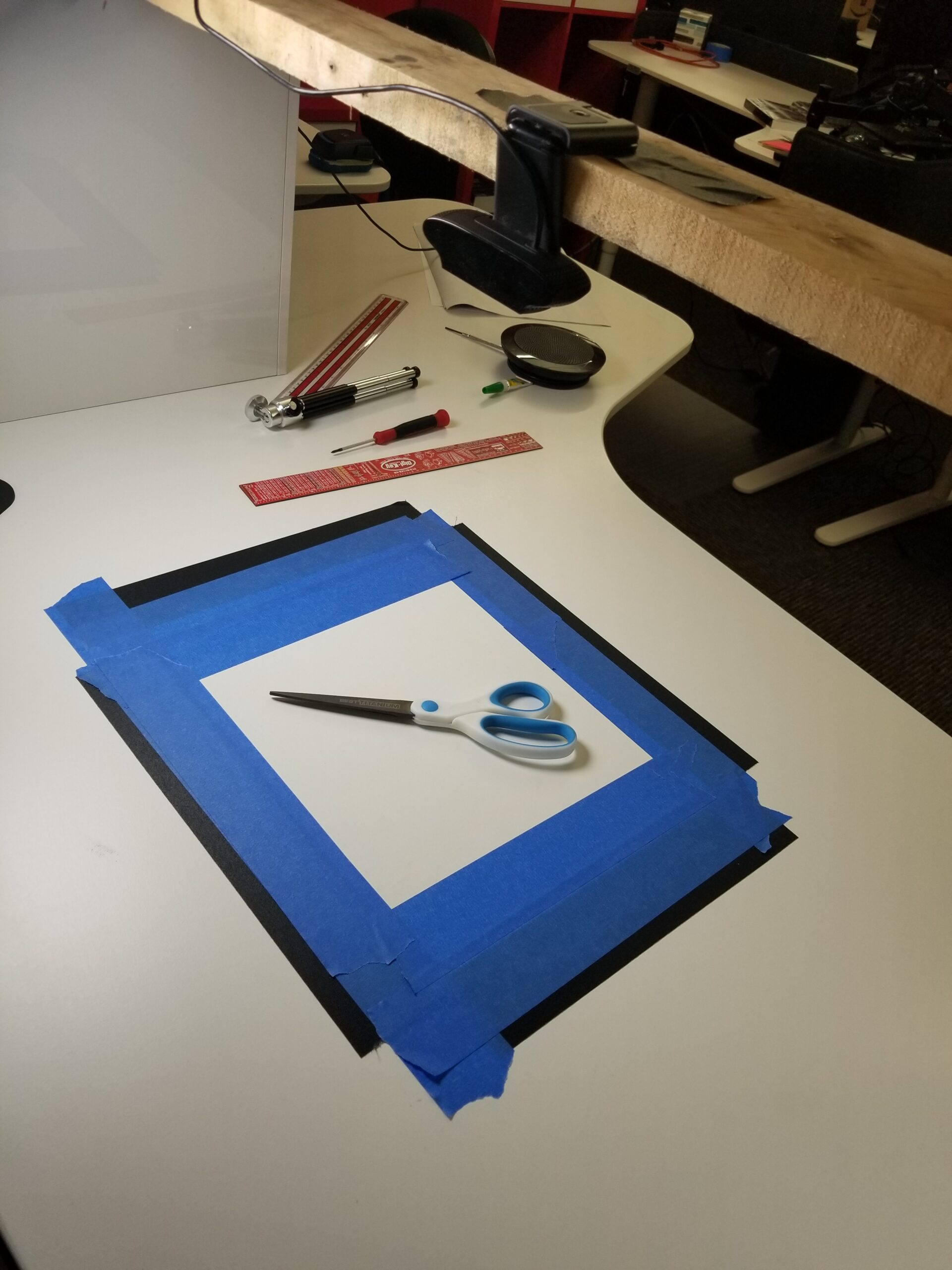

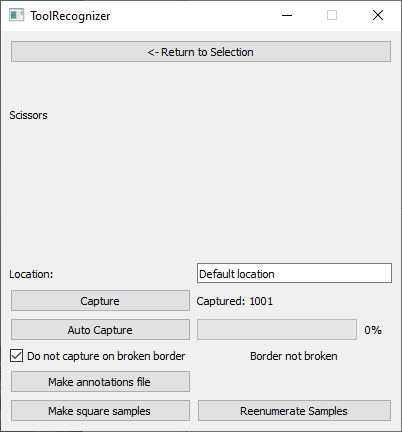

- Bring a tool to the scanning station – a plain surface with a camera pointed at it

- Take a picture using the always-running app

- The app uses the picture to identify the specific tool (not just “a screwdriver” but a specific type of screwdriver)

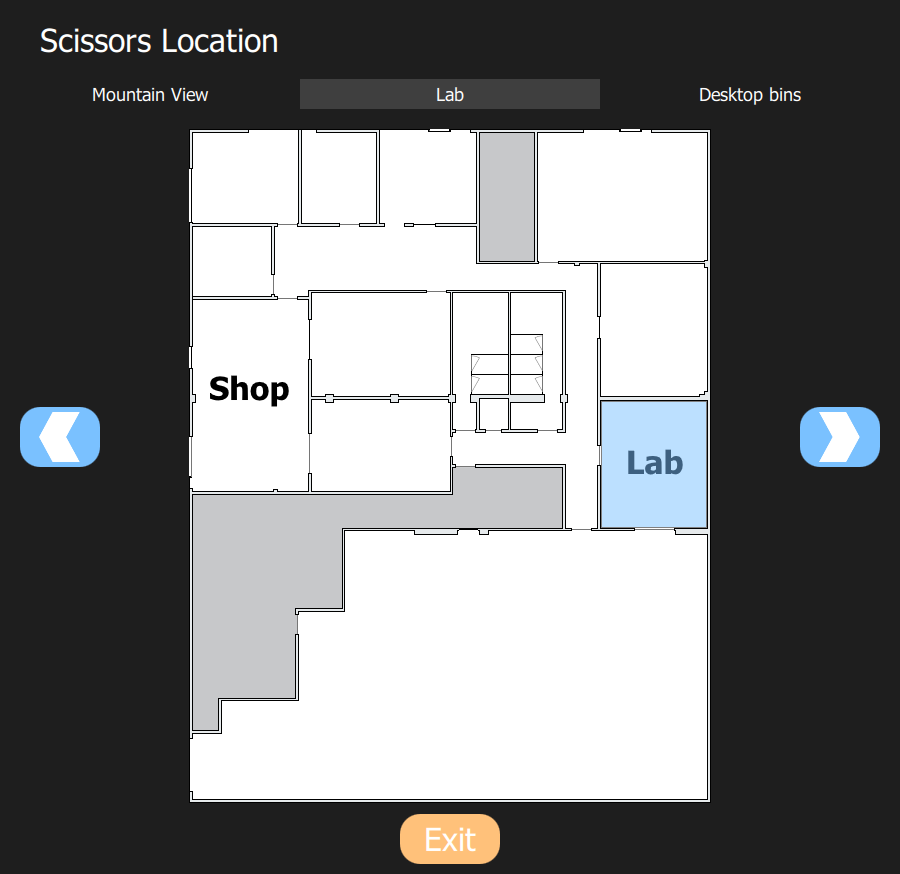

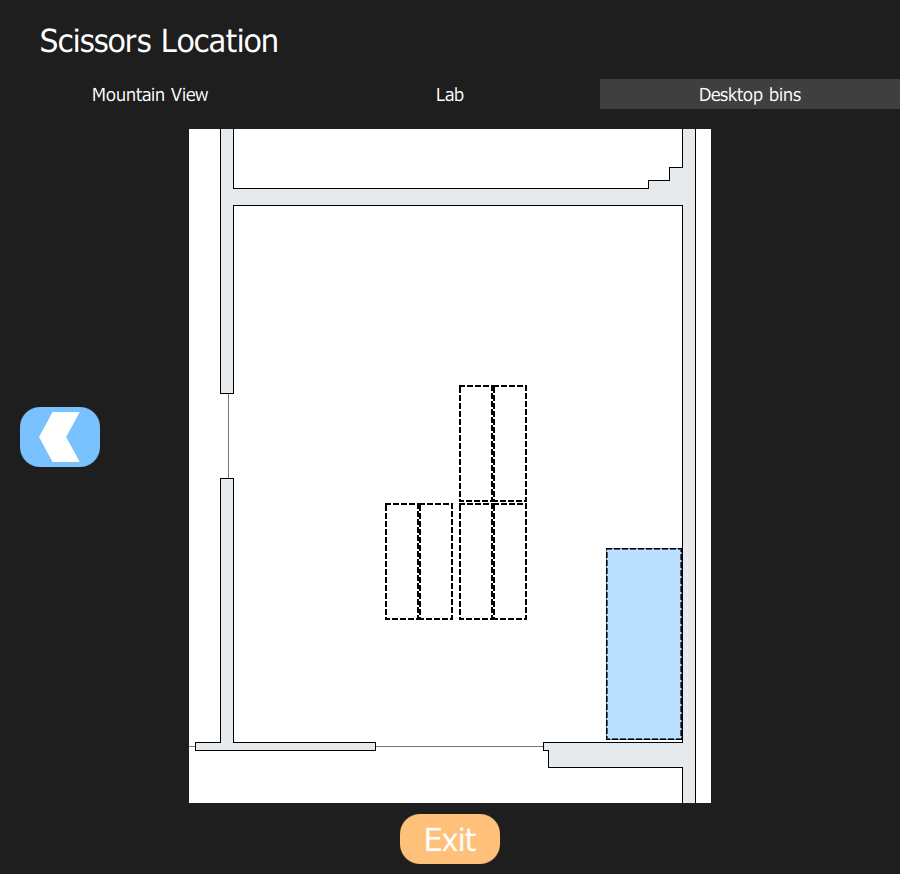

- The app also shows you where that tool belongs using a map of the offices

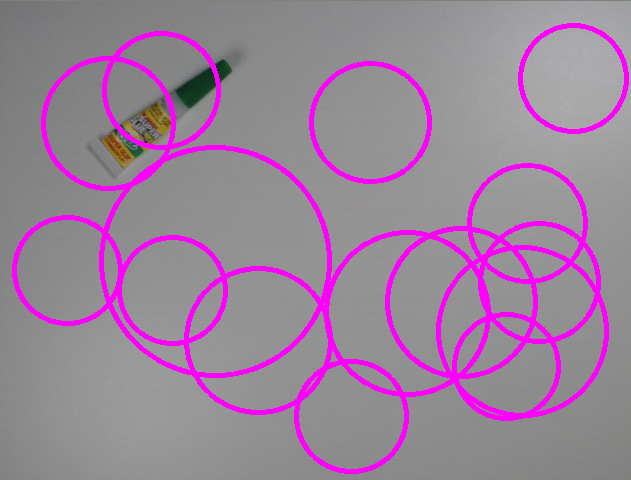

At first, I tried using standard OpenCV solutions, such as a cascade classifier and FLANN-based feature matching. These did not work well for the tools I tested because the recognizer cannot count on a flat, consistent image: a tool can be placed in any orientation and in any part of the view. Shadows that changed with the orientation proved especially tricky for the feature matching.

We did some experimenting with TensorFlow too, but we had yet to put it together with an OpenCV program. The experiments were promising, and we decided that machine learning worked better than feature recognition for our problem. Of course, this led to a different challenge: training the recognizer.

Exploration Can Yield Creative Solutions

Challenge: To train the image recognizer, I needed hundreds of pictures for dozens of tools in different orientations.

Solution: Create a border around the capture area and modify the program to detect a broken border and take pictures. When you reorient the tool, the program automatically takes a new picture (without my hand in the way).
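The idea can be sketched in a few lines of plain Python. This is a minimal sketch and not the app's actual code: it assumes each frame is already cropped so the border sits on its outer edge, that frames arrive as rows of (R, G, B) tuples, and that a picture is taken when the border goes from broken (a hand reaching in) back to intact. `BORDER_COLOR`, `TOLERANCE`, and `AutoCapture` are hypothetical names chosen for illustration.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]   # (R, G, B)
Frame = List[List[Pixel]]      # rows of pixels

BORDER_COLOR: Pixel = (0, 255, 0)  # hypothetical: a bright green border
TOLERANCE = 60                     # hypothetical per-channel tolerance


def is_border_color(pixel: Pixel) -> bool:
    """True if every channel is within TOLERANCE of the border color."""
    return all(abs(c - b) <= TOLERANCE for c, b in zip(pixel, BORDER_COLOR))


def border_intact(frame: Frame, min_fraction: float = 0.98) -> bool:
    """True if nearly all pixels on the frame's outer edge match the border."""
    sides = [row[0] for row in frame[1:-1]] + [row[-1] for row in frame[1:-1]]
    edge = list(frame[0]) + list(frame[-1]) + sides
    matches = sum(is_border_color(p) for p in edge)
    return matches / len(edge) >= min_fraction


class AutoCapture:
    """Keeps one frame each time the border goes from broken back to intact."""

    def __init__(self) -> None:
        self.was_broken = False
        self.captured: List[Frame] = []

    def process(self, frame: Frame) -> bool:
        """Feed one frame; returns True if this frame was captured."""
        intact = border_intact(frame)
        took_picture = intact and self.was_broken
        if took_picture:
            self.captured.append(frame)
        self.was_broken = not intact
        return took_picture
```

Feeding every camera frame to `AutoCapture.process` would then yield one picture per reorientation: nothing is saved while a hand covers part of the border, and a single frame is saved as soon as the border is fully visible again.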

Before we decided to make a tool recognizer, I played around with OpenCV and found that contours and filters on photographs can produce cool, stylized artwork. In one experiment, I manipulated a modern 32-bit photo to map to old-school EGA colors. Perhaps that was not exceedingly practical, but color picking did give me the inspiration for solving the tedium of capturing training pictures.
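For the curious, the EGA experiment boils down to nearest-color matching. The sketch below is one way to do it and not necessarily how the original experiment worked: it assumes the standard 16-color EGA palette, ignores any alpha channel, and picks the closest palette entry by squared RGB distance. The function names `nearest_ega` and `to_ega` are made up for this example.

```python
from typing import List, Tuple

Color = Tuple[int, int, int]  # (R, G, B), alpha ignored

# The standard 16-color EGA palette.
EGA_PALETTE: List[Color] = [
    (0, 0, 0),       (0, 0, 170),    (0, 170, 0),    (0, 170, 170),
    (170, 0, 0),     (170, 0, 170),  (170, 85, 0),   (170, 170, 170),
    (85, 85, 85),    (85, 85, 255),  (85, 255, 85),  (85, 255, 255),
    (255, 85, 85),   (255, 85, 255), (255, 255, 85), (255, 255, 255),
]


def nearest_ega(color: Color) -> Color:
    """Return the palette entry with the smallest squared RGB distance."""
    return min(
        EGA_PALETTE,
        key=lambda p: sum((a - b) ** 2 for a, b in zip(color, p)),
    )


def to_ega(image: List[List[Color]]) -> List[List[Color]]:
    """Map every pixel of an image (rows of RGB tuples) to the EGA palette."""
    return [[nearest_ega(px) for px in row] for row in image]
```

The same "is this pixel close to a known color?" test is what makes it possible to check whether a colored border is still visible in a camera frame.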

Good Software Design is Always Important

Challenge: Have you ever stopped partway through a novel, then tried to pick up where you left off? You end up asking yourself…who are these characters and where is this plot going? I had a similar feeling when I picked up the Tool Recognizer again after a long break.

Solution: To make the code more accessible after a break, or to a new person on the project, I refactored most of the code. I pulled out the user interface code, put it in QML, and applied the Model-View-ViewModel (MVVM) architecture. With the program properly modularized, it should be much easier to pick it up again later.
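To show the shape of that separation, here is a toy sketch in plain Python rather than Qt and QML. It is an illustration under assumptions, not the project's code: `Tool`, `ToolViewModel`, and `on_tool_recognized` are hypothetical names, and a simple callback stands in for a QML property binding.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass(frozen=True)
class Tool:
    """Model: plain data with no knowledge of the UI."""
    name: str
    location: str


class ToolViewModel:
    """ViewModel: holds display state and notifies listeners when it changes."""

    def __init__(self, catalog: Dict[str, Tool]) -> None:
        self._catalog = catalog
        self._listeners: List[Callable[[str], None]] = []
        self.status_text = "Scan a tool"

    def subscribe(self, listener: Callable[[str], None]) -> None:
        """Register a view callback (stands in for a UI binding)."""
        self._listeners.append(listener)

    def on_tool_recognized(self, label: str) -> None:
        """Called by the recognizer with a class label; updates display text."""
        tool = self._catalog.get(label)
        if tool is None:
            self.status_text = "Unknown tool"
        else:
            self.status_text = f"{tool.name} belongs in {tool.location}"
        for listener in self._listeners:
            listener(self.status_text)
```

The view only subscribes and renders text, so the recognizer and the catalog can change without touching UI code, which is the property that makes a project easier to resume after a break.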

Don’t Underestimate The User Interface

Challenge: Make an easy-to-use and fun UI that will satisfy our team. As GUI developers, 219ers have very high standards. No pressure.

Solution: Since 219 has two Bay Area offices exchanging tools, we need the ability to identify the office, room, and area within the room to pinpoint where the tool belongs. So I designed a visual that pinpoints that location and shows, rather than tells, the user where the tool belongs.
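Behind a visual like that sits a simple lookup from a recognized tool to an office, room, and area. The sketch below shows one possible data structure; the `Location` type, the `HOME_LOCATIONS` table, and every entry in it are placeholders invented for illustration, not the app's real data.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass(frozen=True)
class Location:
    """Where a tool lives: office, then room, then area within the room."""
    office: str
    room: str
    area: str

    def describe(self) -> str:
        return f"{self.office} > {self.room} > {self.area}"


# Placeholder entries for illustration only.
HOME_LOCATIONS: Dict[str, Location] = {
    "example_screwdriver": Location("Office A", "Lab", "Bench drawer"),
    "example_caliper": Location("Office A", "Lab", "Tool wall"),
    "example_multimeter": Location("Office B", "Workshop", "Shelf"),
}


def where_does_it_belong(label: str) -> Optional[Location]:
    """Return the home location for a recognized tool, or None if unknown."""
    return HOME_LOCATIONS.get(label)


def tools_in_room(office: str, room: str) -> List[str]:
    """List the tools whose home is the given room, sorted by label."""
    return sorted(
        label
        for label, loc in HOME_LOCATIONS.items()
        if loc.office == office and loc.room == room
    )
```

Keeping the location as three separate fields, instead of one string, lets the UI highlight the office, then the room, then the spot within it.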

By the way, we have another blog post all about best practices for developing a graphical user interface. You should check it out.

What’s Next?

After I demonstrated the app to my coworkers, they had a few suggestions and requests.

- Add an asset tag, QR code, or other visual identifier so the app can use multiple types of information to make a decision

- Implement the opposite functionality: you don’t have the tool, but you want to know where to find it.

- Rethink the app for COVID times

What started out as an experiment to learn more about computer vision turned into a bigger app. The app gained sight while I gained insight. The Tool Recognizer is a rather young app and has a lot of potential. I look forward to seeing what it will become.